Cumulative Reasoning

TLDR: We introduce Cumulative Reasoning (CR), a method that enhances LLMs' problem-solving abilities by orchestrating an iterative, compositional process among different roles, and demonstrate its superior performance across a range of complex tasks.

CR introduces a novel framework leveraging three specialized types of Large Language Models (LLMs) in a collaborative reasoning process:

1. Proposer: Suggests potential steps based on the current context, initiating the reasoning cycle.

2. Verifier(s): Assess the proposer's suggestions for accuracy, incorporating valid steps into the ongoing context.

3. Reporter: Determines the appropriate moment to conclude the reasoning process, based on whether the accumulated context leads to a definitive solution.
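The interaction among the three roles can be sketched as a simple loop. This is a minimal illustration, not the CR implementation: the function `cumulative_reasoning` and the toy role stand-ins (`make_scripted_proposer`, `arithmetic_verifier`, `two_step_reporter`) are hypothetical, and in practice each role would wrap an LLM call rather than a hand-written rule. The key property shown is that only steps accepted by every verifier are added to the context, and the reporter is consulted after each iteration to decide whether to stop.

```python
import operator
from typing import Callable, Iterator, List, Optional

# Hypothetical role signatures; real roles would be LLM calls.
Proposer = Callable[[List[str]], Optional[str]]   # context -> candidate step
Verifier = Callable[[List[str], str], bool]       # context, step -> valid?
Reporter = Callable[[List[str]], Optional[str]]   # context -> answer or None


def cumulative_reasoning(question: str, propose: Proposer,
                         verifiers: List[Verifier], report: Reporter,
                         max_iters: int = 10) -> Optional[str]:
    context: List[str] = [question]
    for _ in range(max_iters):
        step = propose(context)
        if step is None:  # proposer has nothing more to suggest
            break
        # Only steps accepted by every verifier accumulate in the context.
        if all(verify(context, step) for verify in verifiers):
            context.append(step)
        # The reporter decides whether the context already yields an answer.
        answer = report(context)
        if answer is not None:
            return answer
    return None


# ---- Toy stand-ins for the three roles (hypothetical, for illustration) ----
_OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def make_scripted_proposer(candidates: List[str]) -> Proposer:
    # Replays a fixed list of candidate steps, ignoring the context.
    it: Iterator[str] = iter(candidates)
    return lambda context: next(it, None)


def arithmetic_verifier(context: List[str], step: str) -> bool:
    # Accepts steps of the form "a op b = c" with integer operands.
    try:
        lhs, rhs = step.split("=")
        a, op, b = lhs.split()
        return _OPS[op](int(a), int(b)) == int(rhs)
    except (ValueError, KeyError):
        return False


def two_step_reporter(context: List[str]) -> Optional[str]:
    # Toy rule: this problem needs two verified steps; report the last result.
    if len(context) - 1 >= 2:
        return context[-1].split("=")[1].strip()
    return None


if __name__ == "__main__":
    propose = make_scripted_proposer(["3 * 4 = 12", "12 + 5 = 18", "12 + 5 = 17"])
    print(cumulative_reasoning("Compute 3 * 4 + 5", propose,
                               [arithmetic_verifier], two_step_reporter))
    # prints 17 (the wrong step "12 + 5 = 18" is rejected, not accumulated)
```

Passing `verifiers` as a list reflects the "Verifier(s)" role above: a step can be required to pass several independent checks before it joins the context.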

Framework Overview

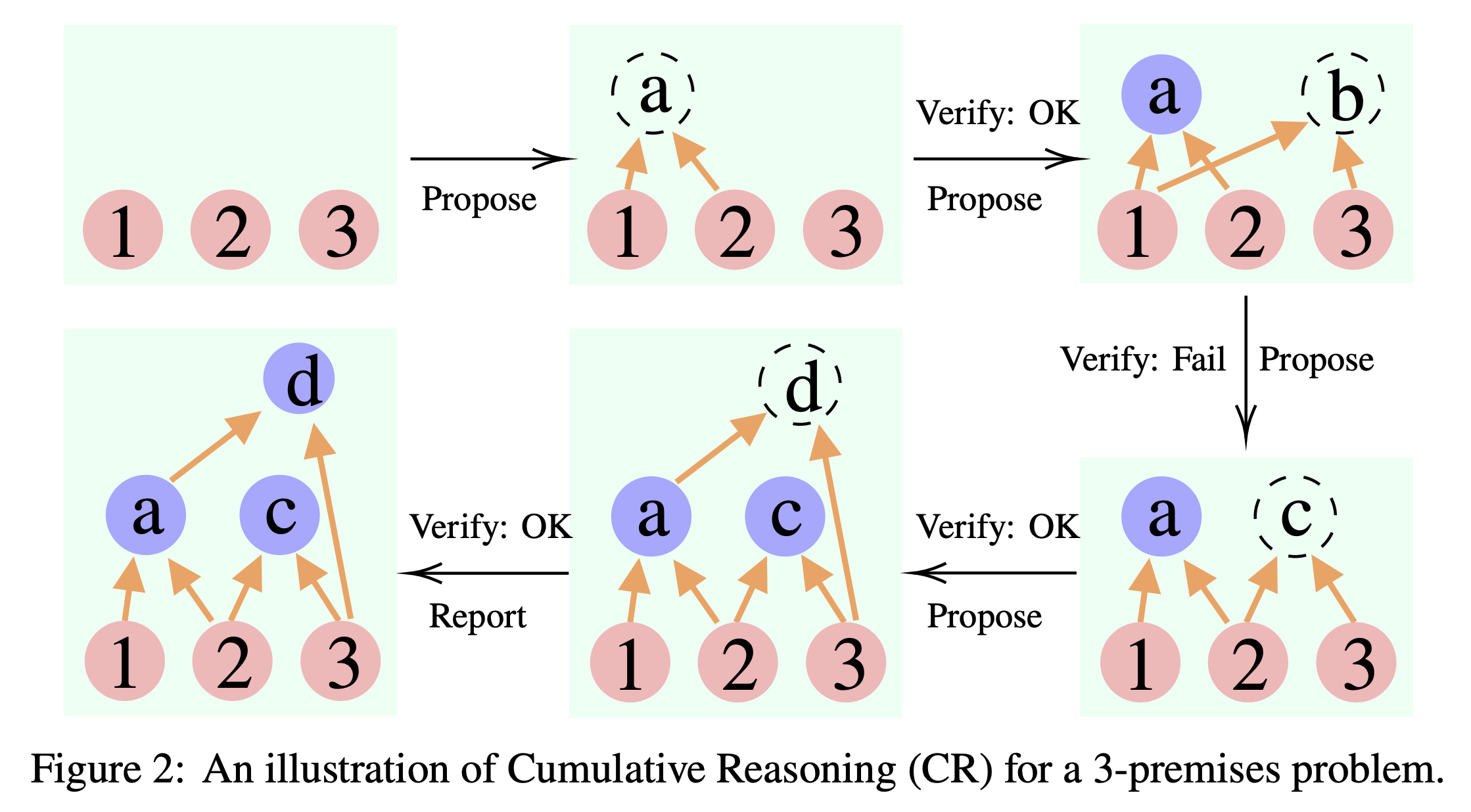

Our approach is visualized in Figure 2, which illustrates how CR iteratively constructs and refines a solution, from initial propositions to a final conclusion. In practice, the proposer is ideally a model pre-trained on related derivation tasks, while the verifiers translate its proposals into formal systems for validation, using either symbolic reasoning systems or a code environment.
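As a rough illustration of a verifier backed by a code environment, the sketch below checks an arithmetic claim by evaluating both sides exactly. It assumes the proposal has already been translated into an equation string such as `"3 * 4 + 5 = 17"`; that translation step (normally performed by an LLM) is omitted, and the helper `verify_equation` is hypothetical. Rather than calling `eval` on arbitrary text, it walks Python's `ast` and permits only integer literals and `+ - * /`, using `fractions.Fraction` so that division is exact.

```python
import ast
import operator
from fractions import Fraction

# Only these binary operators are allowed in a claim.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _eval(node: ast.AST) -> Fraction:
    """Evaluate a restricted arithmetic AST with exact rational numbers."""
    if isinstance(node, ast.Expression):
        return _eval(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, int):
        return Fraction(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_eval(node.operand)
    raise ValueError(f"unsupported syntax: {ast.dump(node)}")


def verify_equation(claim: str) -> bool:
    """Return True iff both sides of 'lhs = rhs' evaluate to the same value."""
    try:
        lhs, rhs = claim.split("=")
        left = _eval(ast.parse(lhs.strip(), mode="eval"))
        right = _eval(ast.parse(rhs.strip(), mode="eval"))
    except (ValueError, SyntaxError, ZeroDivisionError):
        return False  # malformed or unsupported claims are rejected
    return left == right


if __name__ == "__main__":
    print(verify_equation("3 * 4 + 5 = 17"))      # True
    print(verify_equation("12 + 5 = 18"))         # False
    print(verify_equation("1/10 + 2/10 = 3/10"))  # True (exact, unlike floats)
```

Rejecting anything the checker cannot parse keeps the verifier conservative: an unverifiable step is simply not accumulated, rather than being accepted by default.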

While specialized models offer the best performance, CR is flexible enough to be deployed effectively with general-purpose LLMs such as GPT-4, with each role tailored through role-specific prompting. Note that our method goes beyond the self-verification capabilities of a single language model: by managing the thinking context of each role, it effectively introduces several distinct LLMs that examine the problem with fresh eyes.
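One way to picture role-specific prompting with per-role context management is a function that builds a fresh prompt for each role from the shared, verified state only. The sketch below is an assumption-laden illustration: the prompt wording in `ROLE_PROMPTS` and the helper `build_messages` are hypothetical (they are not CR's actual prompts), and the output uses the common chat convention of `{"role": ..., "content": ...}` dictionaries without calling any particular API. Because every call starts a new conversation, a role never sees another role's drafts or rejected proposals.

```python
from typing import Dict, List

# Hypothetical role instructions, for illustration only.
ROLE_PROMPTS: Dict[str, str] = {
    "proposer": "Propose exactly one new intermediate step that follows "
                "from the verified facts.",
    "verifier": "Decide whether the candidate step is valid given the "
                "verified facts. Answer 'valid' or 'invalid'.",
    "reporter": "If the verified facts already answer the question, state "
                "the answer; otherwise reply 'continue'.",
}


def build_messages(role: str, question: str, verified: List[str],
                   candidate: str = "") -> List[Dict[str, str]]:
    """Assemble a fresh, role-specific prompt from the verified context only."""
    facts = "\n".join(f"- {s}" for s in verified) or "(none yet)"
    user = f"Question: {question}\nVerified facts:\n{facts}"
    if role == "verifier":
        # Only the verifier is shown the candidate step under review.
        user += f"\nCandidate step: {candidate}"
    return [
        {"role": "system", "content": ROLE_PROMPTS[role]},
        {"role": "user", "content": user},
    ]


if __name__ == "__main__":
    msgs = build_messages("verifier", "Compute 3 * 4 + 5", ["3 * 4 = 12"],
                          candidate="12 + 5 = 17")
    print(msgs[1]["content"])
```

The same general-purpose model could serve all three roles; what differs between calls is the system instruction and the slice of context each role is allowed to see.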

The underlying rationale for CR draws on intuitionistic logic and the philosophy of mathematical constructivism, which motivate our view that a cumulative, constructive approach is inherently suited to complex reasoning tasks. This methodology not only allows the reasoning trajectory to be adjusted dynamically based on intermediate validations but also significantly enhances the problem-solving efficacy of LLMs.